Each of us is composed of about 100 trillion cells, but only 10 trillion of these are human cells. The other 90 trillion or so are micro-organisms, which are much smaller than animal cells. They sit happily on us or, mainly, inside us, the majority in the intestinal tract. Their complexity is beyond current understanding and very few of them can be identified. Only a tiny minority can be grown outside the body. The huge numbers might be appreciated when we realise that dead bacteria make up about 95% of our faeces. There is very little food residue, as our digestive system is extremely efficient and micro-organisms digest virtually all of the small amount that is left over.

The vast number of micro-organisms can be identified by their DNA. Each human cell has about 25,000 genes, 10,000 of which are shared with a banana! There are not enough genes to explain all our inheritance and functions, so either more genes will be identified in the future or some genes will be found to have multiple functions. The micro-organisms of the gastro-intestinal tract of an individual have in total up to 3.3 million genes. It is likely that this genetic presence is of considerable importance to us, and it certainly cannot be ignored. It is interesting to note that in a number of animals, and in particular in easily studied insects, microbial parasites can influence behaviour to the advantage of the parasite. It has been suggested that in human beings the development of the later stages of syphilis causes a change in behaviour towards a higher level of less discriminating sexual activity, so as to enhance the spread of the causative parasite, Treponema pallidum.

Our microbiota might affect body function in ways that are not yet fully appreciated. In effect, it becomes part of our inheritance. This is a very active research area.

We inherit a great deal from our parents, but not all of it is genetic. Think of money, social background, place of residence and so on. But we also inherit micro-organisms, and most of these come from our mother. It has been assumed that the foetus, safely within its amniotic sac, is sterile, which of course means 100% human. However, recent research suggests that there might be transfer of micro-organisms across the placenta. This is not altogether surprising, as we know that certain micro-organisms can seriously damage the foetus. Historically the most important has been syphilis, then more recently rubella, and very rarely toxoplasma and listeria.

But the foetus is generally well protected. It is during the process of birth that the baby comes into contact with its mother's micro-organisms. An outstanding example of this is hepatitis B virus, which is transmitted during birth following mixing of blood, although these days the baby can be given immunological protection. This has been a particular problem in China, where a significant proportion of the population carries the hepatitis B virus.

The vast majority of the micro-organisms that the baby will acquire from its mother during the next few months or years do no harm at all, and they form an important part of the body. They will do no harm as long as the body can defend itself against them, that is, keep them in their place. Part of this defence involves physical barriers. These are metabolically active and depend on blood flow and associated metabolism, the vitality of the body. The skin is a strong barrier but it is easily damaged. The intestine has a much weaker barrier, but defence of the gastro-intestinal tract is helped by the acidity of the stomach. The vagina also produces defensive acid. When we die the barriers break down and the organisms take over.

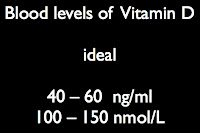

The immune system is designed to protect us from micro-organisms that might have passed the physical barriers. It is vitally important, but there can be failures. Rarely, there might be a genetic defect of immune activity. HIV causes an acquired form of immunological failure. Much more common is vitamin D deficiency. A bacterium such as the one that causes tuberculosis might lie dormant on or in the body, but failure of immunity due to shortage of vitamin D might lead it to become active and cause disease.

The micro-organisms are always poised to take over.