In a recent post I indicated that the maximum life-span of a human being is about 105 years, a life longer than this being very exceptional. However, a shorter life-span is common because individuals encounter premature life-ending events such as major injury or disease. If these are not encountered, then life will ultimately come to an end because of “old age”. Death from old age is the result of frailty or a lack of physiological reserve, effectively a combination of mitochondrial failure and an exhaustion of stem cells.

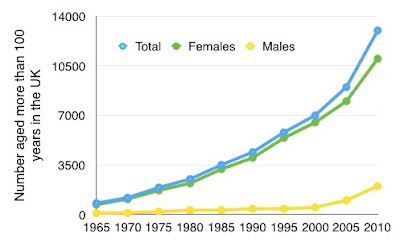

Average life expectancy has been increasing in past decades, during which more and more people have been approaching the maximum life-span, but most have not quite got there. We can see, for example, a steady increase in the UK in the number of people living beyond their 90th birthday. There is no sign yet of this slowing down.

Figure 1. 90 year olds in the UK

Up to about 1870, average life expectancy in Europe and America was about 40 years, and this was due to a large number of deaths in childhood. One of my great grandfathers, Richard Alston (who I never met), was the youngest of twelve children born on a farm in the Hodder Valley, very close to where I live now (I can see the farm-house from my bedroom window). Of the twelve children, only four survived to be adults. Richard moved to Manchester, where he married and had ten children, just four surviving to be adults.

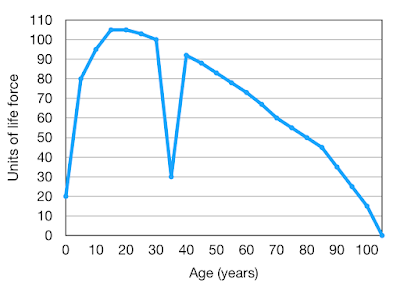

Such stories were usual but nevertheless tragic. In 1840 in the UK, average life expectancy from birth was only about 42 years. But if a child managed to survive to the age of 10 in 1840, then the average life expectancy would be about 57 years. This is shown in Figure 2.

Figure 2. Life expectancy by age
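The gap between life expectancy from birth and life expectancy for a child who has reached the age of 10 is a matter of simple arithmetic: childhood deaths pull the average down sharply. The sketch below illustrates this with a hypothetical cohort of 1,000 births. The numbers are invented for illustration and are not historical data, and the function `mean_age_at_death` is an assumed helper, not a standard actuarial method.

```python
def mean_age_at_death(deaths_by_age, from_age=0):
    """Average age at death among those who die at or after from_age."""
    # Keep only the people who survived to from_age.
    survivors = {age: n for age, n in deaths_by_age.items() if age >= from_age}
    total_people = sum(survivors.values())
    total_years = sum(age * n for age, n in survivors.items())
    return total_years / total_people


# Hypothetical cohort of 1,000 births (illustrative numbers only).
# Keys are age at death, values are number of deaths at that age.
cohort = {
    2: 300,   # deaths in early childhood
    40: 200,
    60: 300,
    75: 200,
}

at_birth = mean_age_at_death(cohort)
at_ten = mean_age_at_death(cohort, from_age=10)

print(f"From birth:  {at_birth:.1f} years")   # 41.6
print(f"From age 10: {at_ten:.1f} years")     # 58.6
```

In this made-up cohort nobody lives longer than in the real figures for 1840, yet removing the childhood deaths from the calculation raises the average by about 17 years. A low life expectancy from birth therefore says more about how many children died than about how long adults lived.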

We can see in Figure 2 increases in life expectancy within the 20th century, especially life expectancy from birth. The major improvement predated the antibiotic era, which started after 1945. It would almost certainly have been the result of civil engineering changes: new housing with wider streets, sanitation and waste disposal, piped clean water, more food, domestic heating with delivered coal, and improved schooling. This has been a world-wide phenomenon, as shown in Figure 3.

Figure 3. Changing life expectancy throughout the world

There would not have been many 70 year olds in 1840 (born 1770) and their life expectancy beyond the age of 70 was on average only about two years. The life expectancy of 70 year olds changed little during the century 1840 to 1940 (Figure 2).

However, there was an improvement seen in 1980 and thereafter. This was the first time that there was an increased life expectancy of 70 year olds, resulting in the great increase in those living beyond their 90th birthday (Figure 1). This was the result of the end of the epidemic of high-mortality CHD (coronary heart disease) and a major decline in deaths from this cause.

As well as major improvements in infant mortality rates in industrialised countries worldwide, the latter half of the 20th century also saw a dramatic worldwide reduction of maternal deaths. In the 21st century this has also happened in the non-industrial world, for example Ethiopia. This is shown in Figure 4.

Since 1980 there has been a steady increase in life expectancy, and this is shown in the countries making up the UK. This is seen in both males and females, as in Figures 5 and 6.

Figure 5. Life expectancy in UK nations (Males)

Figure 6. Life expectancy in UK nations (Females)

This dramatic recent improvement in life expectancy has been mainly the result of the end of the epidemic of CHD. The improvement could not be expected to continue indefinitely, and there has been a levelling-off of the increase during the past couple of years. This is expected and a new steady state is inevitable. But there appears to be a marginal decrease in life expectancy in Scotland and perhaps Northern Ireland in 2017.

There is one disturbing fact: There has been an increase in lung cancer deaths among people who have never smoked, and 80% of these are women, often quite young. Deaths from lung cancer in women who have never smoked now exceed deaths from breast cancer and ovarian cancer combined. The reason for this is unknown.

If we look back at Figure 3 we can see that the increase in average life expectancy in African countries was interrupted by a reduction between 1990 and 2000. This would be explained by the epidemic of AIDS-related deaths occurring at that time. There does not appear to be a cause for the apparent reduction of life expectancy in Scotland and Northern Ireland, and perhaps it is just an aberration that will have disappeared by the next annual report.

The end of the epidemic of CHD (coronary heart disease) has had a major beneficial effect on average life expectancy in the UK. If we encounter another epidemic, then the average life expectancy will obviously diminish.

Otherwise the average life expectancy should maintain a steady state. This will change if one of two things happens. First, if there is a rapid and significant reduction in deaths from another important cause of premature death (as has been the case with CHD deaths), then average age at death will increase. What diseases are likely to diminish?

If on the other hand there develops a sudden increase in deaths from a specific disease, an epidemic of something new, then average age at death will diminish.

It is unlikely that deaths from “all causes” will either diminish or increase to any significant degree. It is changes in the death rates from specific diseases that are much more likely to be significant.

Hodder Valley, Lancashire, UK.